Big Technology Podcast: Claude Code’s Shining Moment, ChatGPT for Healthcare, End Of Busywork?

Episode webpage: https://www.bigtechnology.com/

In 2026, could I publish 10 apps to 4 app stores and manage all the security and features to build a profitable small business? At least 5 of the apps will be paid, and every screen will be translated into 26 languages.

Why? Ten apps across four stores means 40 production app versions, which will push the limits of what a solo entrepreneur can manage, even with the superpowers now enabled by AI tools such as Antigravity and Claude Code.

The four app stores I will publish to are the iOS App Store, Mac App Store, Windows Store, and Google Play Store.

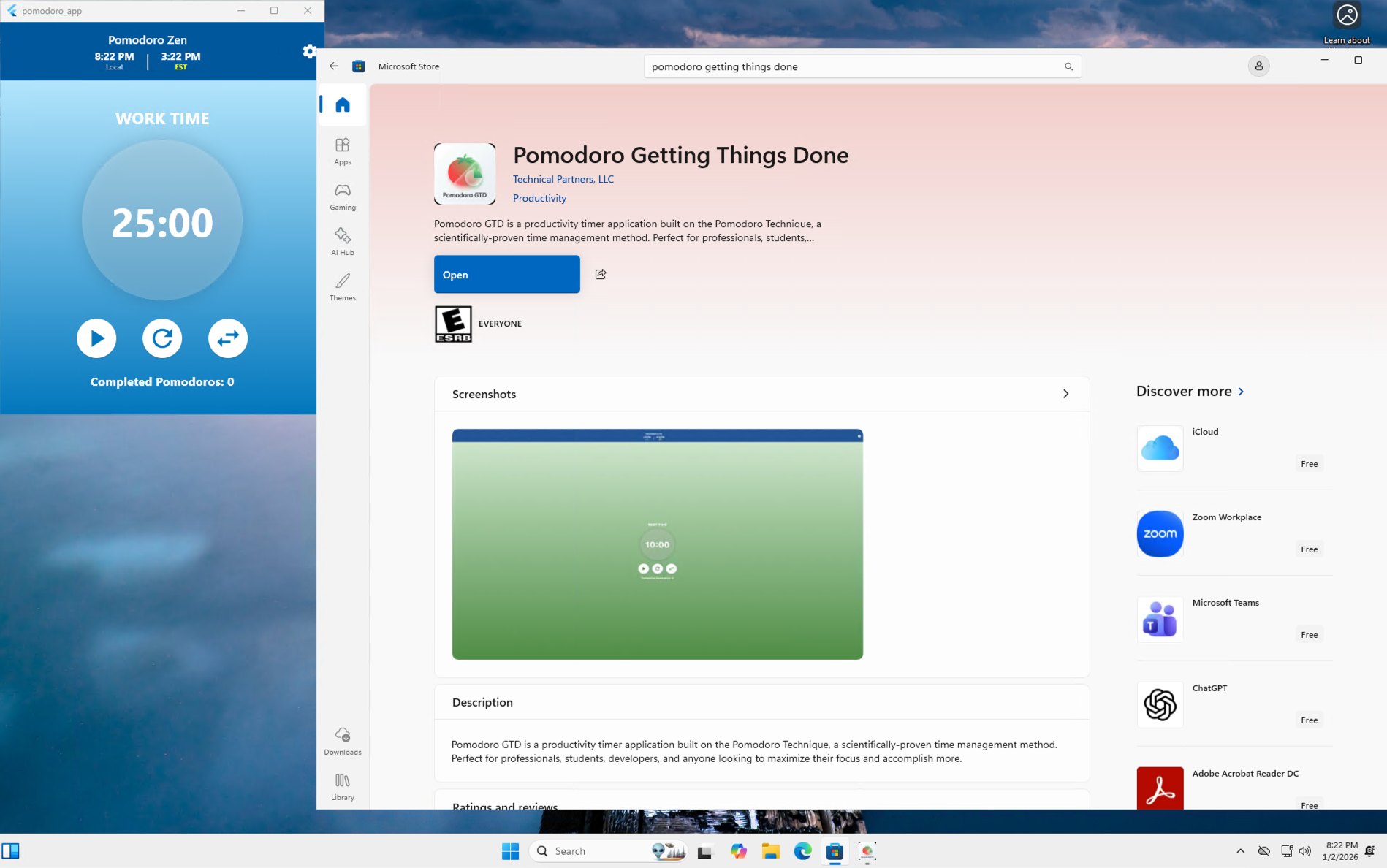

Jan 2nd, 2026 – Published my first app on Windows.

Here are my learnings

Install this simple Pomodoro app

https://pmc.ncbi.nlm.nih.gov/articles/PMC11278271/

Sternberg RJ. Do Not Worry That Generative AI May Compromise Human Creativity or Intelligence in the Future: It Already Has. J Intell. 2024 Jul 19;12(7):69. doi: 10.3390/jintelligence12070069. PMID: 39057189; PMCID: PMC11278271.

The history of digital computation has been dominated by the paradigm of general-purpose processing. For nearly half a century, the Central Processing Unit (CPU) served as the universal engine of the information age. This universality was its greatest strength and, eventually, its critical weakness. As the mid-2010s approached, the tech industry faced a collision of two tectonic trends: the deceleration of Moore’s Law and the explosive growth of Deep Learning (DL).

This report provides an exhaustive analysis of Google’s response to this collision: the Tensor Processing Unit (TPU). It traces the lineage of the TPU from clandestine experiments on “coffee table” servers to the deployment of the Exascale-class TPU v7 “Ironwood.” It explores the architectural philosophy of Domain Specific Architectures (DSAs) championed by Turing Award winner David Patterson, arguing that the future of computing lies not in doing everything reasonably well, but in doing one thing—matrix multiplication—with absolute efficiency.

Through a detailed examination of seven generations of hardware, this report illustrates how the TPU enabled the modern AI revolution, powering everything from AlphaGo to the Gemini and PaLM models. It details technical specifications, the shift to liquid cooling, the introduction of optical interconnects, and the “Age of Inference” ushered in by Ironwood. The analysis suggests that the TPU is a vertically integrated supercomputing instrument that allowed Google to decouple its AI ambitions from the constraints of the merchant silicon market.

To understand the genesis of the TPU, one must understand the physical constraints facing machine learning pioneers in the early 2010s. Before the era of polished cloud infrastructure, the hardware reality for deep learning researchers was chaotic and improvised.

In 2012, Zak Stone—who would later found the Cloud TPU program—operated in a startup environment that necessitated extreme frugality. To acquire the necessary compute power for training early neural networks, Stone and his co-founders resorted to purchasing used gaming GPUs from online marketplaces. They assembled these disparate components into servers resting on their living room coffee table. The setup was so power-inefficient that turning on a household microwave would frequently trip the circuit breakers, plunging their makeshift data center into darkness.1

This “coffee table” era serves as a potent metaphor for the state of the industry. The hardware being used—GPUs designed for rendering video game textures—was accidentally good at the parallel math required for AI, but it was not optimized for it.

By 2013, deep learning was moving from academic curiosity to product necessity. Jeff Dean, Google’s Chief Scientist, performed a calculation that would become legendary in the annals of computer architecture. He estimated the computational load if Google’s user base of 100 million Android users utilized the voice-to-text feature for a mere three minutes per day. The results were stark: supporting this single feature would require doubling the number of data centers Google owned globally.1

This was an economic and logistical impossibility. Building a data center is a multi-year, multi-billion dollar capital expenditure. The projection revealed an existential threat: if AI was the future of Google services, the existing hardware trajectory (CPUs) and the alternative (GPUs) were insufficient.

Faced with this “compute cliff,” Google initiated a covert hardware project in 2013 to build a custom Application-Specific Integrated Circuit (ASIC) that could accelerate machine learning inference by an order of magnitude.4

Led by Norm Jouppi, a distinguished hardware architect known for his work on the MIPS processor, the team operated on a frantic 15-month timeline.4 They prioritized speed over perfection, shipping the first silicon to data centers without fixing known bugs, relying instead on software patches. The chip was packaged as an accelerator card that fit into the SATA hard drive slots of Google’s standard servers, allowing for rapid deployment without redesigning server racks.4

For nearly two years, these chips—the TPU v1—ran in secret, powering Google Search, Translate, and the AlphaGo system that defeated Lee Sedol in 2016, all while the outside world remained oblivious.3

The TPU v1 was a domain-specific accelerator designed strictly for inference.

The defining feature of the TPU is the Matrix Multiply Unit (MXU), based on a systolic array. Unlike a CPU, which constantly reads and writes registers (the “fetch-decode-execute-writeback” cycle), a systolic array flows data through a grid of multiply-accumulate units (MACs) in a rhythmic pulse, with each MAC passing its operands and partial results directly to its neighbors.

In the TPU v1, this array consisted of 256 x 256 MACs.6
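A minimal Python sketch can make the “rhythmic pulse” concrete. It assumes a weight-stationary layout (each MAC holds one weight; activations enter from the left, skewed by one cycle per row, and partial sums flow downward), which is a simplified reading of the TPU v1 design rather than its actual microarchitecture. `systolic_matmul` and `matmul_reference` are illustrative names, and the model checks itself against an ordinary matrix multiply.

```python
from typing import List

Matrix = List[List[int]]


def matmul_reference(x: Matrix, w: Matrix) -> Matrix:
    """Plain triple-loop matrix multiply, used to check the systolic model."""
    m, k, n = len(x), len(w), len(w[0])
    return [[sum(x[i][p] * w[p][j] for p in range(k)) for j in range(n)]
            for i in range(m)]


def systolic_matmul(x: Matrix, w: Matrix) -> Matrix:
    """Cycle-by-cycle toy model of a weight-stationary systolic array.

    PE (k, n) permanently holds w[k][n]. Activations enter column 0 skewed by
    one cycle per array row and move one PE right per cycle; partial sums move
    one PE down per cycle and leave the bottom row as finished outputs.
    """
    rows_m, depth_k, cols_n = len(x), len(w), len(w[0])
    act = [[0] * cols_n for _ in range(depth_k)]   # activation latched in each PE
    psum = [[0] * cols_n for _ in range(depth_k)]  # partial sum leaving each PE
    out = [[0] * cols_n for _ in range(rows_m)]

    total_cycles = rows_m + depth_k + cols_n - 2
    for t in range(total_cycles):
        new_act = [[0] * cols_n for _ in range(depth_k)]
        new_psum = [[0] * cols_n for _ in range(depth_k)]
        for k in range(depth_k):
            for n in range(cols_n):
                if n == 0:
                    m = t - k  # skewed feed: row m reaches array row k at cycle m + k
                    new_act[k][n] = x[m][k] if 0 <= m < rows_m else 0
                else:
                    new_act[k][n] = act[k][n - 1]  # activation hops one PE right
                above = psum[k - 1][n] if k > 0 else 0
                new_psum[k][n] = above + new_act[k][n] * w[k][n]  # one MAC
        act, psum = new_act, new_psum
        # Bottom PE of column n finishes output row m = t - (K - 1) - n.
        for n in range(cols_n):
            m = t - (depth_k - 1) - n
            if 0 <= m < rows_m:
                out[m][n] = psum[depth_k - 1][n]
    return out


if __name__ == "__main__":
    x = [[1, 2], [3, 4]]
    w = [[5, 6], [7, 8]]
    print(systolic_matmul(x, w))  # [[19, 22], [43, 50]]
    assert systolic_matmul(x, w) == matmul_reference(x, w)
```

The design point to notice is reuse: each weight is loaded once and then multiplied against every row of `x` that streams past it, and operands hop between neighboring MACs instead of round-tripping through a register file. On the TPU v1’s 256 x 256 grid, that is 65,536 MACs fed this way.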

The result:

The TPU v1 aggressively used quantization, operating on 8-bit integers (INT8) rather than the standard 32-bit floating-point numbers.6 Because four INT8 values fit in the space of one FP32 value, this effectively quadrupled memory bandwidth, and it significantly reduced energy consumption: an 8-bit integer addition consumes roughly 13x less energy than a 16-bit floating-point addition.7
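The idea behind INT8 inference can be sketched in a few lines of Python. This assumes simple symmetric, per-tensor quantization (one scale factor per vector, values mapped onto -127..127), which is one common scheme and not necessarily the one used for the TPU v1; the function names are hypothetical. The multiply-accumulate runs entirely on small integers, and a single floating-point rescale at the end recovers real units.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map [-max|v|, +max|v|] onto [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def int8_dot(a: List[float], b: List[float]) -> float:
    """Dot product on INT8 codes with an integer accumulator, then rescaled."""
    qa, scale_a = quantize_int8(a)
    qb, scale_b = quantize_int8(b)
    acc = sum(x * y for x, y in zip(qa, qb))  # integer multiply-accumulate only
    return acc * scale_a * scale_b            # one rescale back to real units


if __name__ == "__main__":
    activations = [0.5, -1.2, 3.3, 0.0]
    weights = [1.0, 2.0, -0.5, 4.0]
    exact = sum(x * y for x, y in zip(activations, weights))
    print(f"float: {exact:.4f}  int8: {int8_dot(activations, weights):.4f}")
```

For these inputs the INT8 result lands within 1% of the float result, which is the kind of trade the TPU v1 made in exchange for denser data and cheaper arithmetic.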

When published in 2017, the specifications revealed a processor radically specialized compared to its contemporaries.

| Feature | TPU v1 | NVIDIA K80 GPU | Intel Haswell CPU |
|---|---|---|---|
| Primary Workload | Inference (INT8) | Training/Graphics (FP32) | General Purpose |
| Peak Performance | 92 TOPS (INT8) | 2.8 TFLOPS (FP32) | 2.6 TOPS (INT8), 1.3 TFLOPS (FP32) |
| Power Consumption | ~40W (Busy) | ~300W | ~145W |
| Clock Speed | 700 MHz | ~560-875 MHz | 2.3 GHz+ |
| On-Chip Memory | 28 MiB (Unified Buffer) | Shared Cache | L1/L2/L3 Caches |

Data compiled from 4.

The TPU v1 achieved 92 TeraOps/second (TOPS) while consuming only 40 Watts, providing a 15x to 30x speedup in inference and a 30x to 80x improvement in energy efficiency (performance/Watt) compared to contemporary CPUs and GPUs.6
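The 92 TOPS figure can be reproduced from numbers already in this post: 256 x 256 MACs at 700 MHz, assuming (as is conventional) that each multiply-accumulate counts as two operations. A short Python sketch of the arithmetic, with the 40 W busy power from the table used for performance per watt:

```python
MAC_UNITS = 256 * 256   # 65,536 multiply-accumulate units in the matrix unit
OPS_PER_MAC = 2         # assumption: one multiply plus one add per MAC per cycle
CLOCK_HZ = 700e6        # 700 MHz clock
BUSY_POWER_W = 40       # approximate busy power


def peak_tops(mac_units: int, ops_per_mac: int, clock_hz: float) -> float:
    """Theoretical peak throughput in tera-operations per second."""
    return mac_units * ops_per_mac * clock_hz / 1e12


if __name__ == "__main__":
    tops = peak_tops(MAC_UNITS, OPS_PER_MAC, CLOCK_HZ)
    print(f"{tops:.2f} TOPS peak, {tops / BUSY_POWER_W:.2f} TOPS per watt")
    # prints: 91.75 TOPS peak, 2.29 TOPS per watt
```

Rounded, 91.75 becomes the quoted 92 TOPS. This is a theoretical ceiling that assumes every MAC fires on every cycle.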

The technical success of the TPU v1 validated the theories of David Patterson, a Turing Award winner who joined Google as a Distinguished Engineer in 2016.8

Patterson argued that Moore’s Law (transistor density) and Dennard Scaling (power density) were failing. Consequently, the only path to significant performance gains—10x or 100x—was through Domain Specific Architectures (DSAs).10

The TPU is the archetypal DSA. By removing “general purpose” features like branch prediction and out-of-order execution, the TPU devotes nearly all its silicon to arithmetic. Patterson noted that for the TPU v1, the instruction set was CISC (Complex Instruction Set Computer), sending complex commands over the PCIe bus to avoid bottlenecking the host CPU.6

To free itself from NVIDIA GPUs, Google needed a chip capable of training, which requires higher precision (floating point) and backpropagation.

Introduced in 2017, the TPU v2 was a supercomputing node featuring:

Google researchers invented the bfloat16 (Brain Floating Point) format for TPU v2. By truncating the mantissa of a 32-bit float to 7 bits while keeping its 8-bit exponent, they preserved the dynamic range of FP32 while matching the 16-bit size, and therefore the speed and memory density, of FP16.14 This format has since become an industry standard.
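The bfloat16 idea is easy to see at the bit level. This Python sketch, using only the standard `struct` module, converts a value to float32 and keeps the top 16 bits (sign, 8-bit exponent, 7 mantissa bits). It truncates for simplicity; real hardware generally rounds to nearest even, so treat it as an illustration of the format rather than of any TPU conversion path. The function names are hypothetical.

```python
import struct


def float32_bits(x: float) -> int:
    """Raw IEEE 754 single-precision bit pattern of x."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def to_bfloat16_bits(x: float) -> int:
    """Keep the top 16 bits: 1 sign bit, 8 exponent bits, 7 mantissa bits.

    This truncates (rounds toward zero); hardware usually rounds to nearest even.
    """
    return float32_bits(x) >> 16


def from_bfloat16_bits(bits: int) -> float:
    """Widen bfloat16 back to float32 by appending 16 zero mantissa bits."""
    return struct.unpack("<f", struct.pack("<I", bits << 16))[0]


if __name__ == "__main__":
    for value in (3.14159, 1e38, 1e-30):
        bf16 = from_bfloat16_bits(to_bfloat16_bits(value))
        print(f"{value!r:>10} -> {bf16!r}")
```

3.14159 comes back as 3.140625, showing the lost mantissa precision, while 1e38 and 1e-30, which fall outside FP16's range (its largest finite value is 65,504), survive with their magnitude intact because the 8-bit exponent is untouched.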

The TPU v3 pushed peak compute to 123 TFLOPS per chip.15 However, the power density was too high for air cooling. Google introduced liquid cooling directly to the chip, allowing v3 Pods to scale to 1,024 chips and deliver over 100 PetaFLOPS.16

For the Large Language Model (LLM) era, Google needed exascale capabilities.

TPU v4 introduced Optical Circuit Switches (OCS). Instead of electrical switching, OCS uses MEMS mirrors to reflect light beams, reconfiguring the network topology on the fly (e.g., from 3D Mesh to Twisted Torus).18 This allowed v4 Pods to scale to 4,096 chips and 1.1 exaflops of peak compute.18

To accelerate recommendation models (DLRMs), which rely on massive embedding tables, TPU v4 introduced the SparseCore. These dataflow processors accelerated embeddings by 5x-7x while occupying only 5% of the die area.19
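The workload SparseCore targets can be pictured with a small embedding-bag sketch: look up a few rows of a (normally enormous) table by id and sum or average them into one vector. This is an illustrative Python model of the operation itself with hypothetical names and a made-up table; it says nothing about how SparseCore implements it. The point is the ratio of scattered, data-dependent memory reads to very little arithmetic, a poor fit for a dense matrix unit.

```python
from typing import List, Sequence


def embedding_bag(table: Sequence[Sequence[float]], ids: Sequence[int],
                  mode: str = "sum") -> List[float]:
    """Gather the rows named by ids and pool them into a single vector."""
    if not ids:
        raise ValueError("need at least one id")
    if mode not in ("sum", "mean"):
        raise ValueError("mode must be 'sum' or 'mean'")
    dim = len(table[0])
    pooled = [0.0] * dim
    for row_id in ids:            # sparse, data-dependent memory reads
        row = table[row_id]
        for d in range(dim):      # only one add per element fetched
            pooled[d] += row[d]
    if mode == "mean":
        pooled = [v / len(ids) for v in pooled]
    return pooled


if __name__ == "__main__":
    # Hypothetical tiny table: 3 ids, 2-dimensional embeddings.
    table = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    print(embedding_bag(table, [0, 2]))  # [6.0, 8.0]
```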

The v4 Pods were used to train PaLM (Pathways Language Model) across two Pods simultaneously, achieving 57.8% hardware FLOPs utilization.20

In 2023, Google bifurcated the TPU line to address different market economics, splitting it into the cost-efficient TPU v5e and the performance-focused TPU v5p.

Announced in May 2024, Trillium (TPU v6e) focused on the “Memory Wall.”

In April 2025, Google unveiled its most ambitious silicon to date: TPU v7, codenamed Ironwood. While previous generations chased training performance, Ironwood was explicitly architected for the “Age of Inference” and agentic workflows.

Ironwood represents a major leap in raw throughput and memory capacity, designed to hold large “Chain of Thought” reasoning models in memory.

A single Ironwood pod can scale to 9,216 chips.14 Google claims Ironwood delivers 2x the performance per watt compared to the already efficient Trillium (v6e). This efficiency is critical as data centers face power constraints; Ironwood allows Google to deploy agentic models that “think” for seconds or minutes (inference-heavy workloads) without blowing out power budgets.

David Patterson and Jeff Dean championed the metric of Compute Carbon Intensity (CCI).22 Their research highlights that the vertical integration of the TPU—including liquid cooling and OCS—reduces the carbon footprint of AI. TPU v4, for instance, reduced CO2e emissions by roughly 20x compared to contemporary DSAs in typical data centers.20

| Feature | TPU v1 (2015) | TPU v4 (2020) | TPU v5p (2023) | TPU v6e (2024) | TPU v7 (2025) |
|---|---|---|---|---|---|
| Codename | – | Pufferfish | – | Trillium | Ironwood |
| Use Case | Inference | Training | LLM Training | Efficient Training | Agentic Inference |
| Peak compute per chip | 92 TOPS (INT8) | 275 TFLOPS (BF16) | 459 TFLOPS (BF16) | 918 TFLOPS (BF16) | ~2,300 TFLOPS (BF16) |
| HBM capacity | – | 32 GB | 95 GB | 32 GB | 192 GB |
| Memory bandwidth | 34 GB/s | 1,200 GB/s | 2,765 GB/s | 1,600 GB/s | 7,400 GB/s |
| Max pod size (chips) | N/A | 4,096 | 8,960 | 256 | 9,216 |

Table compiled from 6, 15, 21.
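Pod-level peak numbers in this post follow from simple multiplication: chips per pod times per-chip peak. A small Python sketch using the figures quoted above (the TPU v3 numbers come from the v3 paragraph, everything else from the table) reproduces, for example, the “over 100 PetaFLOPS” for v3 and the ~1.1 exaflops for v4. These are theoretical peaks; real jobs land well below them, as the 57.8% utilization quoted for PaLM shows.

```python
from typing import List, Tuple

# (generation, chips per pod, peak TFLOPS per chip) as quoted in this post.
# The v7 entry uses the approximate BF16 value from the table.
POD_SPECS: List[Tuple[str, int, float]] = [
    ("TPU v3", 1024, 123),
    ("TPU v4", 4096, 275),
    ("TPU v5p", 8960, 459),
    ("TPU v6e", 256, 918),
    ("TPU v7", 9216, 2300),
]


def pod_peak_pflops(chips: int, tflops_per_chip: float) -> float:
    """Theoretical pod peak in PFLOPS: chips x per-chip TFLOPS / 1,000."""
    return chips * tflops_per_chip / 1000


if __name__ == "__main__":
    for name, chips, tflops in POD_SPECS:
        peak = pod_peak_pflops(chips, tflops)
        print(f"{name:8} {chips:>6,} chips -> {peak:>9,.0f} PFLOPS")
```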

The TPU is not merely a chip; it is a “Silicon Sovereign.” From the coffee table to the Ironwood pod, Google has successfully decoupled its AI destiny from the merchant market, building a machine that spans from the atom to the algorithm.

This blog post was written by Claude Code after I tutored a student who needed to manage 5 repositories that were all part of the same project.

If you’re building a cross-platform application—say, a mobile game available on Android, iOS, and web—you’ve likely faced a common architectural dilemma: how do you organize your codebase?

Each platform has its own distinct requirements:

These platforms often demand separate repositories because:

But here’s the problem: how do you work across all these repositories efficiently?

The most common approach is to keep each platform in its own repository and check them out separately:

```
~/projects/myapp-android/
~/projects/myapp-ios/
~/projects/myapp-web/
~/projects/myapp-backend/
```

Downsides:

- `cd`-ing between different directories

A clever workaround is to create a “meta” folder with symlinks to each repository:

```
~/projects/myapp-meta/
├── android -> ../myapp-android/
├── ios -> ../myapp-ios/
├── web -> ../myapp-web/
└── backend -> ../myapp-backend/
```

This is better, but still has issues:

Git submodules solve this problem by allowing you to embed one Git repository inside another while maintaining their independence. Think of it as a “pointer” from your parent repository to specific commits in child repositories.
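The “pointer” is literal: the parent repository’s tree stores each submodule as a gitlink entry (mode `160000`, object type `commit`) that records one commit SHA and nothing else, and `git ls-tree HEAD` prints these entries. A short Python sketch that picks them out of that output might look like this; `submodule_pointers` is a hypothetical helper, not part of Git, and the SHAs in the sample are made-up placeholders.

```python
from typing import Dict


def submodule_pointers(ls_tree_output: str) -> Dict[str, str]:
    """Map submodule path -> pinned commit SHA from `git ls-tree` output.

    Each line has the form "<mode> <type> <object>\t<path>".
    Submodules appear as gitlinks: mode 160000, type "commit".
    Git quotes unusual paths; `git ls-tree -z` avoids that.
    """
    pointers: Dict[str, str] = {}
    for line in ls_tree_output.splitlines():
        meta, _, path = line.partition("\t")
        fields = meta.split()
        if len(fields) == 3 and fields[0] == "160000" and fields[1] == "commit":
            pointers[path] = fields[2]
    return pointers


if __name__ == "__main__":
    # Hypothetical `git ls-tree HEAD` output for the meta repository.
    sample = (
        "100644 blob 1111111111111111111111111111111111111111\t.gitmodules\n"
        "100644 blob 2222222222222222222222222222222222222222\tREADME.md\n"
        "160000 commit 3333333333333333333333333333333333333333\tandroid\n"
        "160000 commit 4444444444444444444444444444444444444444\tios\n"
    )
    print(submodule_pointers(sample))
```

Updating a submodule from the parent’s point of view means nothing more than changing one of these recorded SHAs and committing that change.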

Here’s how the structure looks:

```
myapp-meta/          # Parent repository
├── .gitmodules      # Submodule configuration
├── android/         # Submodule → myapp-android repo
├── ios/             # Submodule → myapp-ios repo
├── web/             # Submodule → myapp-web repo
└── backend/         # Submodule → myapp-backend repo
```

- `git clone --recursive`

If you’re using Claude Code (Anthropic’s AI coding assistant), git submodules provide an exceptional workflow enhancement:

When Claude Code operates in a parent repository with submodules, it can:

For example, if you ask Claude to “update the answer validation logic across all platforms,” it can read and modify code in android/, ios/, and web/ subdirectories—all while understanding they’re separate Git repositories.

Claude Code reads CLAUDE.md instruction files to understand project-specific context. With submodules:

```
myapp-meta/
├── CLAUDE.md          # High-level project overview
├── android/
│   └── CLAUDE.md      # Android-specific build commands
├── ios/
│   └── CLAUDE.md      # iOS-specific setup instructions
└── backend/
    └── CLAUDE.md      # Backend architecture details
```

Claude Code can reference the parent CLAUDE.md for project-wide context, then dive into platform-specific CLAUDE.md files when working in submodules. This creates a natural documentation hierarchy.

Claude Code can handle complex workflows like:

```bash
# Claude can navigate to each submodule and create feature branches
cd android && git checkout -b feature/new-scoring
cd ../ios && git checkout -b feature/new-scoring
cd ../web && git checkout -b feature/new-scoring
```

When you ask Claude to “implement a new scoring system across all platforms,” it can:

Tools like grep and find work naturally across the unified directory structure. When Claude searches for “validation algorithm” implementations, it finds them across all platforms without needing to search multiple separate checkouts.

This approach was born from real experience managing a cross-platform game project with:

Before git submodules (using symlinks):

After git submodules:

- `git clone --recursive` and everything just works

```bash
# Create and initialize the meta repository
mkdir myapp-meta
cd myapp-meta
git init

# Create a README
echo "# MyApp Meta Repository" > README.md
echo "This repository contains all platform repositories as submodules." >> README.md
git add README.md
git commit -m "Initial commit: meta-repository"
```

```bash
# Add each platform repository as a submodule
git submodule add https://github.com/yourname/myapp-android.git android
git submodule add https://github.com/yourname/myapp-ios.git ios
git submodule add https://github.com/yourname/myapp-web.git web
git submodule add https://github.com/yourname/myapp-backend.git backend

# Commit the submodule configuration
git commit -m "Add platform submodules"
```

This creates a .gitmodules file:

```ini
[submodule "android"]
	path = android
	url = https://github.com/yourname/myapp-android.git
[submodule "ios"]
	path = ios
	url = https://github.com/yourname/myapp-ios.git
[submodule "web"]
	path = web
	url = https://github.com/yourname/myapp-web.git
[submodule "backend"]
	path = backend
	url = https://github.com/yourname/myapp-backend.git
```
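Because `.gitmodules` is plain text in Git’s config syntax, tooling (or an AI assistant) can read it to learn where each platform lives. Below is a simplified Python sketch that assumes simple `key = value` lines and straight double quotes in the section headers, like the file above; it ignores Git’s quoting, escaping, and include rules, so for anything real prefer `git config --file .gitmodules --get-regexp path`. `parse_gitmodules` is a hypothetical helper.

```python
import re
from typing import Dict, Optional

# [submodule "name"] headers and indented key = value lines.
SECTION_RE = re.compile(r'^\s*\[submodule\s+"([^"]+)"\]\s*$')
KEY_RE = re.compile(r"^\s*([\w-]+)\s*=\s*(.*?)\s*$")


def parse_gitmodules(text: str) -> Dict[str, Dict[str, str]]:
    """Return {submodule name: {key: value}} for a simple .gitmodules file."""
    modules: Dict[str, Dict[str, str]] = {}
    current: Optional[Dict[str, str]] = None
    for line in text.splitlines():
        stripped = line.strip()
        if not stripped or stripped.startswith(("#", ";")):
            continue  # skip blank lines and comments
        section = SECTION_RE.match(line)
        if section:
            current = modules.setdefault(section.group(1), {})
            continue
        if stripped.startswith("["):
            current = None  # some other section: ignore its keys
            continue
        key = KEY_RE.match(line)
        if key and current is not None:
            current[key.group(1)] = key.group(2)
    return modules


if __name__ == "__main__":
    sample = (
        '[submodule "android"]\n'
        "\tpath = android\n"
        "\turl = https://github.com/yourname/myapp-android.git\n"
        '[submodule "ios"]\n'
        "\tpath = ios\n"
        "\turl = https://github.com/yourname/myapp-ios.git\n"
    )
    for name, settings in parse_gitmodules(sample).items():
        print(f"{name}: {settings['path']} <- {settings['url']}")
```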

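```bash
# Add remote and push
git remote add origin https://github.com/yourname/myapp-meta.git
# Make sure the branch is named main (git init may name it master, depending on your Git configuration)
git branch -M main
git push -u origin main
```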

```bash
# Clone with all submodules in one command
git clone --recursive https://github.com/yourname/myapp-meta.git

# Or, if you already cloned without --recursive:
git clone https://github.com/yourname/myapp-meta.git
cd myapp-meta
git submodule init
git submodule update
```

```bash
# Navigate to a submodule
cd android

# You're now in a regular Git repository
git status
git log

# Create a feature branch
git checkout -b feature/new-feature

# Make changes, commit
git add .
git commit -m "Android: Implement new feature"

# Push to the submodule's remote
git push origin feature/new-feature

# Return to parent repository
cd ..

# The parent repository notices the submodule changed
git status   # Shows: modified: android (new commits)

# Commit the submodule pointer update
git add android
git commit -m "Update android submodule to latest feature branch"
git push
```

```bash
# Update all submodules to latest commits on their tracked branches
git submodule update --remote

# Or update a specific submodule
git submodule update --remote android

# Commit the updated submodule pointers
git add .
git commit -m "Update submodules to latest versions"
git push
```

Create a parent CLAUDE.md with navigation guidance:

````markdown
# MyApp Meta Repository

## Working in This Repository

When working in this repository, be aware of which subdirectory you're in:

- Changes in `android/` affect the Android app repository
- Changes in `ios/` affect the iOS app repository
- Changes in `web/` affect the web app repository
- Changes in `backend/` affect the backend repository

Always check your current working directory before making changes. Use `pwd` to verify.

## Common Commands by Platform

### Android

```bash
cd android
./gradlew assembleDebug
```

### iOS

```bash
cd ios
pod install
xcodebuild -workspace MyApp.xcworkspace -scheme MyApp -destination 'platform=iOS Simulator,name=iPhone 16' build
```

### Web

```bash
cd web
npm install
npm run dev
```
````

Create platform-specific CLAUDE.md files in each submodule with detailed build instructions, architecture notes, and troubleshooting tips.

Sometimes the parent repository will show submodule conflicts:

```bash
# Update submodules to the committed versions
git submodule update --init --recursive

# If there are conflicts, navigate to the submodule
cd android

# Resolve using standard Git workflow
git fetch
git merge origin/main
# ... resolve conflicts ...
git add .
git commit

# Return to parent and update pointer
cd ..
git add android
git commit -m "Resolve android submodule conflicts"
```

```bash
# ❌ Wrong: committing in the submodule, then pushing only the parent
cd android
git add .
git commit -m "Add feature"
cd ..
git push   # Submodule changes not pushed!

# ✅ Correct: push the submodule first, then the parent
cd android
git add .
git commit -m "Add feature"
git push origin main
cd ..
git add android
git commit -m "Update android submodule"
git push
```

When you enter a submodule, you might be in “detached HEAD” state (pointing to a specific commit, not a branch):

```bash
cd android
git status   # HEAD detached at abc1234

# ✅ Create a branch before making changes
git checkout -b feature/my-work

# Or check out the main branch
git checkout main
```

```bash
# After pulling the parent repository
git pull

# Submodules might still be at old commits
# ✅ Update them:
git submodule update --init --recursive
```
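To see which submodules are out of step after a pull, `git submodule status` prefixes each line with a state marker: a space when the checked-out commit matches the commit recorded in the parent repository’s index, `-` when the submodule is not initialized, `+` when the checked-out commit differs, and `U` for merge conflicts. Here is a small Python sketch that reads that output and lists what still needs `git submodule update --init --recursive`; `stale_submodules` is a hypothetical helper and the sample output is made up.

```python
from typing import List, Tuple

# Prefix markers documented for `git submodule status`.
STATE_BY_PREFIX = {
    "-": "not initialized",
    "+": "checked-out commit differs from the parent's recorded commit",
    "U": "merge conflicts",
}


def stale_submodules(status_output: str) -> List[Tuple[str, str]]:
    """Return (path, reason) for every submodule that is not in sync.

    Each line looks like "<prefix><sha> <path> [(<describe>)]", where the
    prefix is a space when the submodule matches the recorded commit.
    Paths containing spaces are not handled by this simple split.
    """
    stale: List[Tuple[str, str]] = []
    for line in status_output.splitlines():
        if not line.strip():
            continue
        prefix, fields = line[0], line[1:].split()
        if prefix in STATE_BY_PREFIX and len(fields) >= 2:
            stale.append((fields[1], STATE_BY_PREFIX[prefix]))
    return stale


if __name__ == "__main__":
    # Hypothetical `git submodule status` output (placeholder SHAs).
    sample = (
        " aaaaaaa android (heads/main)\n"
        "+bbbbbbb ios (heads/main)\n"
        "-ccccccc web\n"
    )
    for path, reason in stale_submodules(sample):
        print(f"{path}: {reason}")
```

An empty list means every submodule already matches the commit the parent expects.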

Pro tip: Create a Git alias:

```bash
git config --global alias.pullall '!git pull && git submodule update --init --recursive'

# Now use:
git pullall
```

| Feature | Separate Repos | Symlinks | Monorepo | Git Submodules |
|---|---|---|---|---|
| Independent versioning | ✅ | ✅ | ❌ | ✅ |
| Unified workspace | ❌ | ✅ | ✅ | ✅ |
| Cross-platform | ✅ | ⚠️ (issues on Windows) | ✅ | ✅ |
| Version consistency tracking | ❌ | ❌ | ✅ | ✅ |
| Easy team onboarding | ❌ | ❌ | ✅ | ✅ |
| Independent CI/CD | ✅ | ✅ | ⚠️ (requires setup) | ✅ |
| IDE support | ✅ | ⚠️ (confusing) | ✅ | ✅ |
| Claude Code friendly | ❌ | ⚠️ | ✅ | ✅ |

Git submodules provide an elegant solution for managing multi-platform projects without sacrificing repository independence. They’re especially powerful when combined with AI coding assistants like Claude Code, which can leverage the unified workspace while respecting repository boundaries.

While submodules have a learning curve, the benefits far outweigh the initial complexity—especially for teams building cross-platform applications with distinct platform requirements and release cycles.

Use Git Submodules when:

Stick with Separate Repositories when:

Consider a Monorepo when:

Note: This blog post was written by Claude AI (Anthropic’s coding assistant), but is based on the real-world experience of managing the “Math Get To 24” cross-platform game project. The pain points, solutions, and best practices described here come from actual development work across Android (Java/Gradle), iOS (Objective-C/Swift/CocoaPods), Flutter web (Dart), and Firebase backend repositories. The tutorial reflects production-tested workflows that have improved developer productivity and onboarding experience.

I have worked in high tech for 30 years, building products for public companies and training thousands of software engineers to be productive.

In 202, I tutored middle school, high school, and college students in computer science for 331 hours.

My most recent students are adults who are not computer engineers but who want to use tools such as Cursor, Windsurf, and Claude Code to build websites and apps, because they have an idea and have heard that vibe coding makes that possible for them.

One such example is someone who wanted to build a desktop app that takes an image and does automatic dimensioning. Within an hour, I helped him install and set up Claude Code. We launched Claude Code inside the terminal, and I guided him to first create a product requirements document (PRD) for the product he wanted.

Then I asked him to prompt the AI agent to create an engineering implementation plan. With these two documents, the AI has all the context it needs.

The next step is simply to use ‘@’ to mention the implementation.md file. After just two prompts telling it to follow @implementation.md, my student had a fully working Python GUI application that does automated edge detection and creates a measurement overlay.

The level of quality after only 2 prompts surprised even me, and I have been using Claude Code daily for over 5 months.

If you are thinking about building a custom app for yourself, here are my suggestions:

1) Use Claude Code; at $20/month it is well worth the cost.

2) Build something that you will use every day; otherwise, you will not have the motivation to continue.

3) Be ready to spend at least 40 hours prompting and learning about the capabilities of AI coding and, more importantly, its limitations.

4) My rule of thumb is that it will take you 40 hours to build a small app and 80 hours to build a medium-size app.

Keep building!

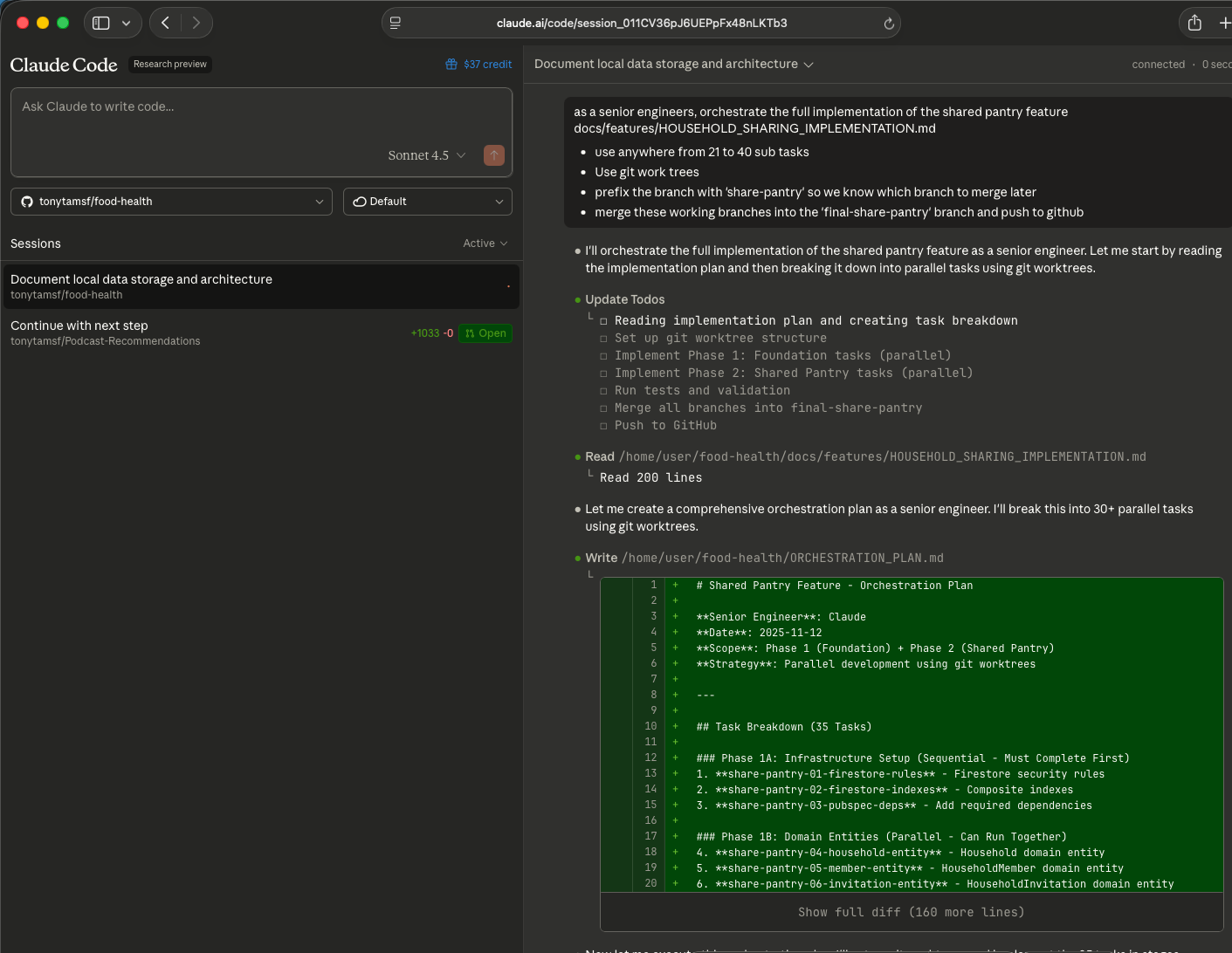

Today at 3:30pm, I fired off this prompt to Claude Code For Web, fingers crossed.

As a senior engineer, orchestrate the full implementation of the shared pantry feature docs/features/HOUSEHOLD_SHARING_IMPLEMENTATION.md

On this birthday, I gift myself the space, time and resources to learn. I recognize the uncomfortable feeling in my stomach when I’m stretching myself to learn something new, and the fear of failing in front of someone while I’m pushing my limits. Then comes the breakthrough when I’ve finally mastered a new skill: that feeling of growth, of reaching a new step, looking out and seeing what’s beyond from a new vantage point.

In the everyday moments, I would like to remind my future self that there is always something to be learned. When I talked with an artist at the Holualoa Kona Coffee Festival about how she works with block prints to print multiple colors, I was in awe of something new I learned.

When I tutor a college student who is learning C, I learn how she misunderstands pointers, which come so naturally to me after looking at C code for 30 years. (Realizing that in ‘int *name;’ the ‘*’ is not part of an ‘int *’ type but is associated with ‘*name’ completely changed her understanding.)

I learned a little bit about growing cocoa by looking at our own trees and the little cocoa babies that manage to grow from hundreds of flowers.

Here is my birthday week celebration!

I have been using AI coding tools daily to prototype and launch production-level apps and websites. I have also been tutoring non-computer-science folks through my Wyzant tutoring business on how to use AI to produce the apps they have always wished existed in the world. One high school student is building a community golf app in New York. Another student is building a mental health app guided by a cute animated dragon.

I have lived through the dot-com bubble in the 2000s, and YES this generative AI period does feel like a bubble.

Ben Thompson wrote a fantastic piece of research, “The Benefits of Bubbles”:

if AI does nothing more than spur the creation of massive amounts of new power generation it will have done tremendous good for humanity. … To that end, I’m more optimistic today than I was even a week ago about the AI bubble achieving Perez-style benefits: power generation is exactly the sort of long-term payoff that might only be achievable through the mania and eventual pain of a bubble, and the sooner we start feeling the financial pressure — and the excitement of the opportunity — to build more power, the better.