Category: attention

-

Podcast: Jia Tolentino on what happens when life is an endless performance

The Ezra Klein Show: Best of: Jia Tolentino on what happens when life is an endless performance https://www.vox.com/ezra-klein-show-podcast

-

Single Tasking Time & Attention

So Far: I’ve deleted all my infinite-scrolling apps (FB, Instagram, LinkedIn, Twitter). I’ve stopped reading online news and only read a printed Sunday paper. I’ve paid for products to remove ads (Crunchyroll, YouTube, Pandora). Call To Action To Take Back Attention & Time: Today, I listened to the founder of Center For Humane…

-

Reading – Thinking – Writing

I have recently started a media detox program: no online news, online blogs, or social networks (Twitter, LinkedIn, FB, Instagram). Instead I am focusing on listening to interview-based podcasts and reading physical books. During this journey of reassessing how I spend my attention and time, I also started powering off my cell phone…

-

“Never compare your Google searches to everyone else’s social media posts”

I learned something new today, from page 160 of the book “Everybody Lies”: a great reminder to “Never compare your insides to everyone else’s outsides.” You can read more here from author Seth Stephens-Davidowitz (bio) and the op-ed piece he wrote for the NYTimes, “Don’t Let Facebook Make You Miserable.”

-

Delete Google Activities

Taking Back Control Of My Privacy: Goodbye Infinite Scroll Media : LinkedIn App; Goodbye Twitter @ tonytam; Friends Don’t Let Friends’ Data Be Stolen #deleteAllFacebook (Instagram,WhatsApp); Life Strategy #1: Why I Am Only Reading Long Articles and Books. Google Activities: This is the hardest one yet. I love the convenience of Google Maps, suggestion browsing…

-

How I Optimize

Recently I have made choices about how I spend my time, and this has helped me decide what I say YES to and, more importantly, what I say NO to. Optimize for time over money: If I have to choose between taking Muni or Lyft to work, I choose Lyft. Muni takes 45 minutes for…

-

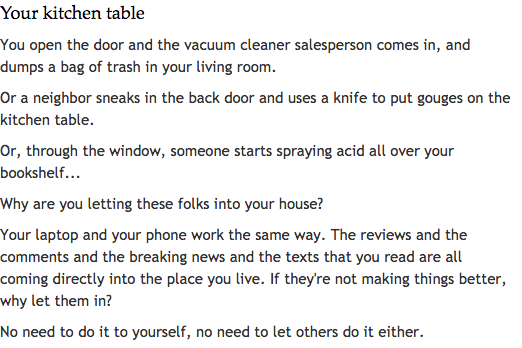

link: Seth Godin Your kitchen table

Taking Back Attention: I’m going on a detox program with media; Goodbye Infinite Scroll Media : LinkedIn App; Goodbye Twitter @ tonytam; Friends Don’t Let Friends’ Data Be Stolen #deleteAllFacebook (Instagram,WhatsApp). Thank you brain trust member : Seth Godin. After I had done my detox, I found this gem in my email: Your kitchen table…

-

Goodbye Infinite Scroll Media : LinkedIn App

I have removed Facebook. I have removed Twitter. Feb 8th, 2018 – Today I removed the LinkedIn app from my phone. It was a difficult decision because I have been using the LinkedIn app to catch up on posts from my professional connections as well as to message people on LinkedIn. Why? I’m trying to read…

-

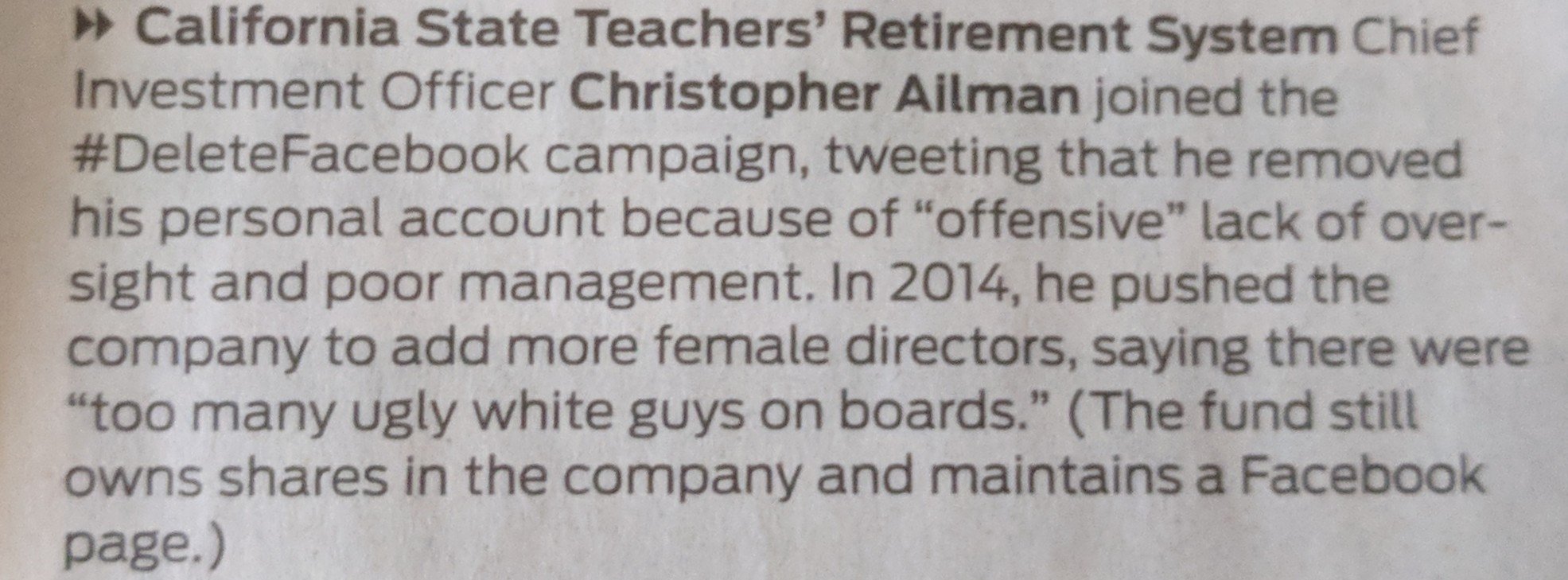

Friends Don’t Let Friends’ Data Be Stolen #deleteAllFacebook (Instagram,WhatsApp)

On March 27th, 2018, I decided to remove all my accounts on the following Facebook-owned apps: Facebook, Instagram and WhatsApp. Facebook.com – This is easy because I only have 1 connection. Instagram – This is a little harder because I use the message feature to talk to 2 people on iOS devices. So now we…